For more than two millennia, science has progressed primarily through experimental observation and the development of theories. These two approaches complement each other in building our understanding of the physical world around us. In the past few decades, a third significant methodology, simulation using powerful computers and computational science, has greatly added to our scientific capability and understanding.

Computational science involves recreating or simulating the physical phenomena of interest using a combination of mathematical modeling and very fast arithmetic on modern computers. Take the engineering problem of designing the wings of an aircraft: the optimal design is the one that gives the best performance under the operating constraints.

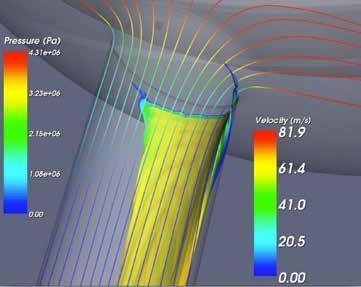

Computational science, more specifically computational fluid dynamics in the case of this application, allows engineers to simulate the performance of an aircraft wing design on computers efficiently and reach the optimal design at much lower cost. The techniques that make this possible, known as ‘numerical methods’, convert the complex mathematical equations that represent the physics of the experiment into a set of arithmetic ones. These arithmetic equations are then solved by a computer. A typical home computer or laptop today can perform the equivalent of a few hundred billion additions, subtractions, multiplications or divisions in just one second. The fastest supercomputer in the world today can perform a mind-boggling 93 million billion arithmetic operations in a second!
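To give a flavour of how a ‘numerical method’ turns an equation from physics into plain arithmetic, here is a minimal sketch in Python. It is a toy problem chosen for illustration, not the aircraft wing calculation: it assumes a quantity that decays according to dy/dt = -rate × y and uses the simple forward Euler scheme, replacing the derivative with a small difference. The function name `euler_decay` and all parameter values are hypothetical.

```python
import math

def euler_decay(rate, y0, t_end, steps):
    """Approximate y(t_end) for dy/dt = -rate * y using only arithmetic."""
    dt = t_end / steps  # size of each small time step
    y = y0
    for _ in range(steps):
        # The derivative is replaced by a difference:
        # y_new = y_old + dt * (-rate * y_old)
        y = y + dt * (-rate * y)
    return y

# Illustrative values only (assumptions, not taken from the article).
approx = euler_decay(rate=1.0, y0=1.0, t_end=1.0, steps=1000)
exact = math.exp(-1.0)  # known exact answer for this toy problem
print("approximate:", approx)
print("exact:      ", exact)
```

Each pass through the loop is one multiplication chain and one addition, and taking more (smaller) steps brings the approximate answer closer to the exact one. Real simulations repeat this kind of arithmetic over millions of points in space as well as time, which is where the raw speed of the computer matters.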

As computational science becomes more pervasive, researchers are simulating more and more complex phenomena and problems. Weather, coastal flooding from storm surges and tsunamis, flows in fuel injectors, biological phenomena like blood flow through arteries, protein formation, and the interaction of galaxies and stars form just the tip of the iceberg for simulation capabilities. The increase in the complexity of the problems demands a continual increase in the computing power required.

Today’s supercomputers consist of several million processors that compute simultaneously. In a very crude sense, they are several million desktops or laptops put together through some smart engineering, and the problem to be solved is divided appropriately among these smaller computers, which are the building blocks of the supercomputer. As problem sizes increase, more of these building blocks are required, and soon the ‘numerical method’, which enables the computers to use their fast arithmetic, starts hitting a wall of scalability. If a problem is divided into 100 equal parts and given to 100 different computers to solve, one would expect it to be solved 100 times faster than by a single computer calculating the whole problem by itself. In reality, when the ‘numerical method’ divides the problem up, there are several overheads, one of them being communication between the computers, which prevent this perfect linear scalability. These overheads are characteristic of the ‘numerical methods’ themselves.
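The scalability wall can be illustrated with a toy model in Python. This is only a sketch under stated assumptions, not a description of any real supercomputer or of a specific numerical method: it assumes the computing work divides evenly among the workers, while the communication overhead grows in proportion to the number of workers. The function name `speedup` and the numbers used are made up for illustration.

```python
def speedup(workers, compute_time=100.0, comm_cost_per_worker=0.01):
    """Toy model of parallel speedup with a communication overhead.

    Assumptions (hypothetical): the compute time splits evenly across
    the workers, and communication adds a cost proportional to the
    number of workers.
    """
    parallel_time = compute_time / workers + comm_cost_per_worker * workers
    return compute_time / parallel_time

for p in (1, 10, 100, 1000):
    print(p, "workers -> speedup of about", round(speedup(p), 1))
```

With these made-up numbers, 100 workers deliver a speedup of about 50 rather than the ideal 100, and going to 1000 workers makes things worse, with a speedup of only about 10, because communication then dominates the run time. Reducing that overhead term is what lets a method keep benefiting from more processors.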

Our research group is developing new methods that aim to greatly reduce these overheads, enabling simulations of complex physics that run not hundreds but millions of times faster. Such methods would utilise the maximum capabilities of the fastest supercomputers in the world today and could also saturate any computing machine built over the next couple of decades.

Prof. Shivasubramanian Gopalakrishnan